Hey, Siri, did my dad take his medicine?

UNC Charlotte AI tech breaks ground, bridges oceans in real-time health care analysis

By Schaefer Edwards

Photos by Amy Hart

Children depend on their parents to keep them out of harm’s way. Fast forward a few decades; as parents age, adult children often feel a responsibility to return the favor and make sure their elderly loved ones live safely and healthily.

The shift from carefree child to concerned adult isn’t easy, even for those lucky enough to live in close proximity to their parents. For the millions of people who are separated from their parents by several hundred miles — or even oceans and hemispheres — staying connected is a major challenge.

Srijan Das, assistant professor of computer science in UNC Charlotte’s College of Computing and Informatics, has spent years researching how to program computers to analyze video information and quickly identify particular actions and behaviors of people. His aim is to use technology to help older people like his parents in India live longer, healthier lives, and reassure adult children that their loved ones are thriving while staying independent.

“It’s been eight years since I left India, and my parents are elderly. I call them every day because I want to understand how they are doing,” Das said. “This work isn’t just connected to elderly people themselves. It’s connected to their sons and daughters as well.”

From early in his academic career, Das was fascinated by the idea of “teaching” computers to see and understand the visible world as well as, or better than, their human counterparts.

One day in 2021, his academic pursuit became personal.

WORLDS APART

While working as a postdoctoral research associate at Stony Brook University in New York, Das received a phone call no child wants: His father’s health had taken a sudden turn for the worse. His dad was quickly admitted to a local hospital, but Das and his only sister were worlds away, both living in the United States pursuing their professional goals. His mother was alone to deal with the fallout.

Luckily, Das’s father recovered fully. His doctors determined that an imbalance in potassium levels caused by medications was the reason for his health scare.

Professor Srijan Das

Now, staring at his father’s mortality, Das felt doubt creeping in. His parents had always encouraged him to follow his dreams wherever they led. Had his decision to move thousands of miles away led to a near-death experience for his dad that could have been prevented had Das been there in person?

“I felt very helpless,” Das said. “I wasn’t there, and I just couldn’t do anything.”

CRACKING THE CODE

Das has now spent years conducting foundational computer vision research, from his time as a doctoral student at the French Institute for Research in Computer Science and Automation (INRIA) in Sophia Antipolis, France, to his stint at Stony Brook and since joining the faculty at UNC Charlotte in 2022.

In 2024, Das and his collaborators in the Charlotte Machine Learning Lab and the University’s burgeoning AI4Health Center developed an artificial intelligence-powered program that can interpret video data to discover and track human health-related behaviors with significantly more precision than other major AI models on the market.

The possibilities are as vast as they are exciting. With this new technology, computer scientists could potentially create an in-home recording system that analyzes video footage of people living in a given space. An AI assistant (think Apple’s iPhone Siri assistant or Amazon’s Alexa) could then quickly translate that analysis into timely, highly detailed health information in response to simple questions.

For example, physicians would be able to ask for evidence of tiny changes in a person’s gait or other movements to allow early detection of degenerative conditions like Alzheimer’s disease or Parkinson’s disease. Caretakers could be notified instantly if a nursing home resident slips and falls in their room — any time of the day or night.

And concerned children like Das with an elderly parent living far away could ask their new AI helper “Did my dad take his medicine?” and get an answer — and peace of mind — in seconds.

INTRODUCING LLAVIDAL

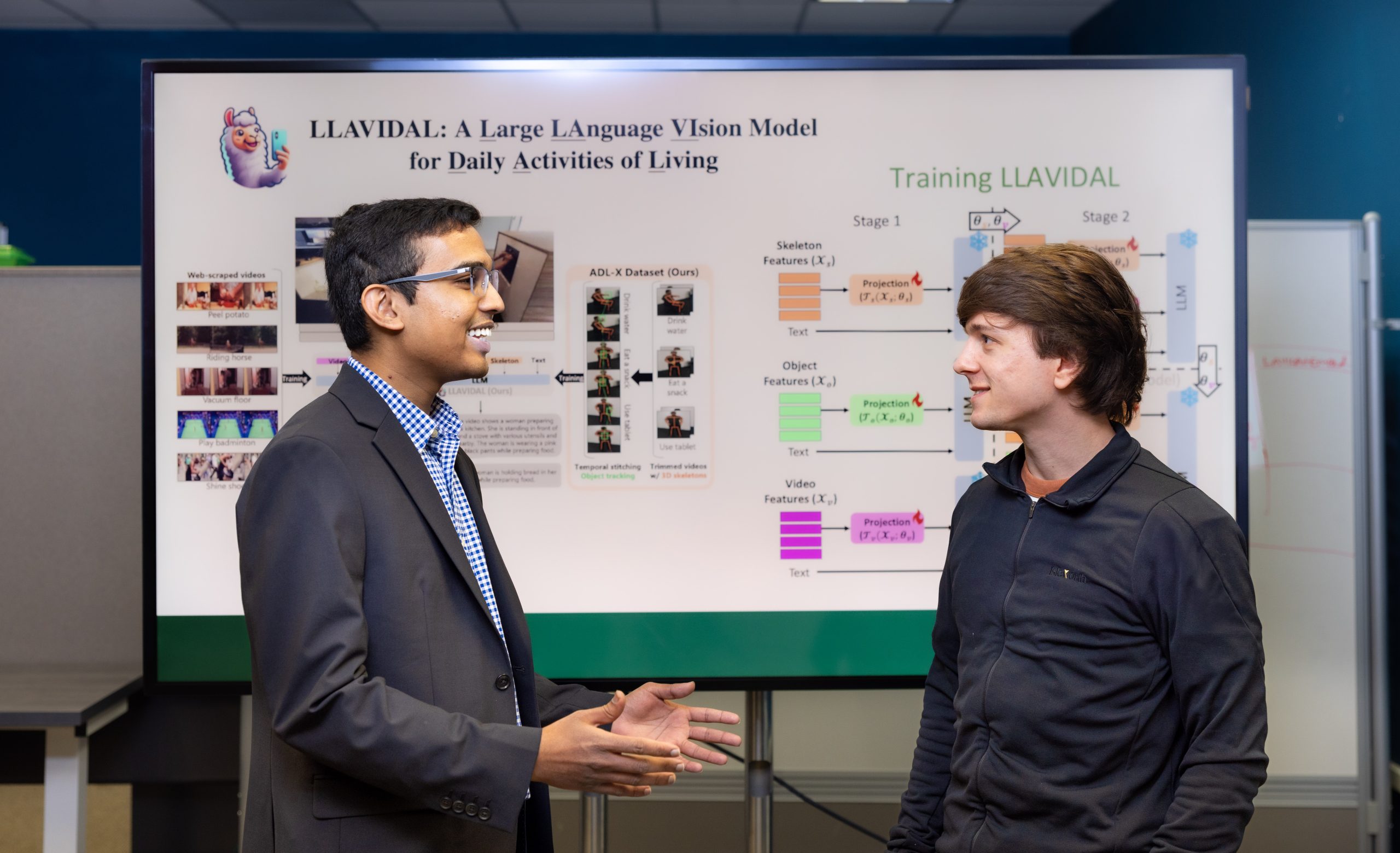

The code name of the model developed by Das and his colleagues is LLAVIDAL, short for Large Language Vision Models for Daily Activities of Living, and a clever play on Vicuna, the open-source AI tech that forms the model’s backbone. Collaborators on the project include UNC Charlotte faculty Pu Wang, an associate professor of computer science; CCI graduate students Dominick Reilly ’22, Rajatsubhra Chakraborty, Arkaprava Sinha and Manish Govind; Le Xue from the Salesforce AI team; and Francois Bremond, an INRIA and Université Côte d’Azur faculty member who advised Das during his doctoral studies.

LLAVIDAL is a large language vision model, an advanced form of the large language models that use artificial intelligence and machine learning processing to comprehend and interpret text-based data, then generate text responses to written questions in plain human language. Think back to the earliest version of ChatGPT released to the public in 2022: Type a question in the chatbot, and the model quickly generates a response based on patterns learned from vast amounts of pre-existing text, producing a written answer that’s easily understandable.

Work on LLAVIDAL began in early 2024 with the goal of incorporating video content into LLMs for health-focused applications. Soon, ChatGPT and other major LLMs like Google’s Gemini and Microsoft’s Copilot added the capability to interpret video data for the first time, evolving into large language vision models that combined LLM tech with the ability to understand visual information (the V in LLVM).

Das, doctoral student Dominick Reilly and their colleagues created the state-of-the-art LLAVIDAL model.

TECH TITANS FALL SHORT

While the major existing AI models could analyze video data, Das found obvious limits to their abilities. These limits stem from how these models have been “trained” primarily on videos that are widely accessible on the internet, like social media videos or sports highlight videos, with subjects typically framed perfectly with the camera focused directly on them.

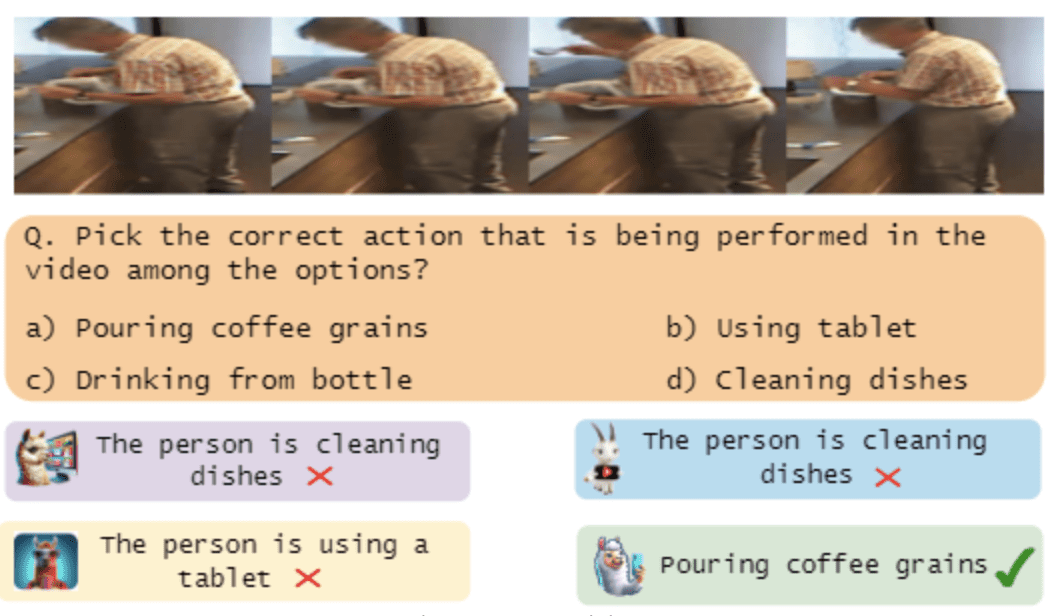

However, when these models are asked to interpret videos of more mundane activities of daily life, especially when the subject may not be perfectly in frame and where they’re doing something highly specific like slicing bread, making coffee or getting up from a chair, Das found the results were usually inaccurate.

Das realized that the latter style of video is what a large language vision model would need to understand to answer questions about behaviors tied to health care (e.g., opening a pill bottle, changes in walking patterns or tripping and falling), so he and his team specifically trained LLAVIDAL on that type of content.

Thanks to its unique training material of over 100,000 video-instruction pairs of daily living activities, LLAVIDAL consistently outperforms major LLVMs in analyzing health-related behaviors. In one example, researchers recorded a seated person who began to fall out of their chair, with the video ending just before the subject actually fell down. When LLAVIDAL analyzed the video, the model correctly determined that the person was about to fall, an improvement over other major LLVMs, which had incorrectly stated that the subject had already fallen.

Das and his colleagues illustrate LLAVIDAL’s advantage over other models in correctly identifying daily life activities.

ONLY THE BEGINNING

Since they first published the results of their LLAVIDAL work on the open-access online research platform arXiv on June 13, 2024, Das and his team have further fine-tuned the model to better analyze video of daily life activities. The project was recently accepted for presentation at the annual Computer Vision and Pattern Recognition Conference, one of the world’s most influential computer science research conferences.

Fascinated by what the team has accomplished, multiple companies and organizations have reached out about licensing partnerships for adapting the technology to their specific needs. They’ve even heard from some of the tech industry titans whose models LLAVIDAL bested in their tests.

Dominick Reilly ’22, computer science doctoral student

For Reilly, Das’s doctoral advisee, working on LLAVIDAL was a natural extension of the computer vision research he conducted as a CCI undergraduate.

Now pursuing his Ph.D. in computer science, Reilly is thrilled to have found a project and a mentor to help him fulfill his goals. “I want to gain skills that allow me to provide benefits to society,” Reilly said. “The research that we’re doing can be applied to a wide range of situations that can help people in their daily lives.”

From protecting the elderly to making life easier for beleaguered health workers, Reilly is proud that this work could lead to the type of positive change he wants to make possible.

“The health care workforce is already kind of strained, and I think countries all over the world have these aging populations and not enough people to take care of them and monitor them,” Reilly said. “So if we can deploy models like LLAVIDAL to monitor them, we can give those people more independence.”

Every day, Das thinks of his parents, thousands of miles and an ocean away, and how this technology could one day help aging people the world over live the fullest, healthiest possible lives, no matter the distance that separates them from their loved ones.

“This is something that we all think about. That’s the story I share with my students,” Das said. “I feel very lucky to be working on this problem.”

Schaefer Edwards is director of communications for the College of Computing and Informatics.